One bonus of being a program chair is that you get to have fun with data. In this post I'd like to review two pieces of data, one related to author response and one related to review quality assessment.

tldr: Generally, I think author response is useless, except insofar as it can be cathartic to authors and thereby provide some small psychological benefit. And in general people don't seem that dissatisfied with their papers' reviews, and this is largely independent of the outcome of the paper (conditioned on selection bias of those who responded).

On Author Response

NAACL and other conferences have for some time allowed authors to respond to their reviewers, ostensibly to correct errors, but more often just to argue their position. Most people I've talked to who are in favor of author response say that it made the difference for one paper between it being rejected (pre-response) and accepted (post-response). Of course this is unknowable because decisions aren't made pre-response, so what these authors are reporting is their guess as to accept/reject before response versus actual accept after response. My own internal guesses of accept/reject are often off the mark, so you can imagine I don't find this argument particularly strong. To give a sense as to how hard this prediction is, here is a plot showing whether your paper got accepted or not as a function of the mean overall score.

Here, the x-axis is the cumulative distribution of papers (there were more rejects than accepts, so the length of these is normalized to percentage) and the y-axis is the mean overall score for the paper.

Focusing just on the solid lines (long papers), you can see that there were one or two papers with average scores of 3.6 that got rejected, and several papers with average scores of 3.0 that got accepted. If instead you look at "probability of accept" given mean overall score, basically what you see is that papers with a score of 2.8 or lower are almost certainly rejected, papers with 3.9 or higher are almost certainly accepted, and around 3.2 it's an absolute toss-up.

Now, you might ask: who chose to respond? Perhaps people with clear outcomes elected not to respond at all. Here are the numbers for that:

This is only for long papers (it looks the same for short). Each dot is a paper; red dots are rejects, blue dots are accepts, and open circles mean no response. Definitely people with scores less than 3 responded less frequently than those with scores above 3, but even among the top-scoring papers, not everyone skipped the response. There were some "hopeful" papers with scores less than 2.0 that responded, even though it was certainly not useful.

But wait, you might say: perhaps those papers with an average score of 1.33 gave a response and their final scores went up to 4.5? OK, first of all, these are final scores plotted above, not pre-response scores. But we certainly can look at how scores changed between the initial reviews and the final reviews. (Note this conflates two things: author response and reviewer discussion. We'll get back to that later.)

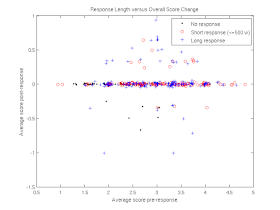

Here, we again have one dot per paper (these are randomly perturbed with small variance so they're all visible). Along the x-axis is the original score of this paper; along the y-axis is how much this score changed between the initial review and the final review. Overall the average absolute change across all papers is 0.1. The vast majority (87%) didn't change at all.
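For readers who want to run the same kind of summary on their own review data, here is a minimal sketch. The function name `score_change_summary`, the tolerance parameter, and the five example papers are all made up for illustration; this is not the script or the data behind the plot above.

```python
from statistics import mean


def score_change_summary(initial, final, tol=1e-9):
    """Summarise how per-paper mean scores moved between initial and final reviews.

    `initial` and `final` are parallel lists of mean overall scores.
    Changes smaller than `tol` in absolute value count as "unchanged".
    """
    if len(initial) != len(final):
        raise ValueError("initial and final must have the same length")
    if not initial:
        raise ValueError("need at least one paper")
    deltas = [f - i for i, f in zip(initial, final)]
    n = len(deltas)
    return {
        "mean_abs_change": mean(abs(d) for d in deltas),
        "frac_unchanged": sum(1 for d in deltas if abs(d) <= tol) / n,
        "frac_up": sum(1 for d in deltas if d > tol) / n,
        "frac_down": sum(1 for d in deltas if d < -tol) / n,
    }


# Hypothetical scores for five papers (NOT the NAACL data).
initial = [3.0, 2.0, 4.0, 3.5, 1.5]
final = [3.0, 2.5, 4.0, 3.0, 1.5]
summary = score_change_summary(initial, final)
print(summary)
```

Comparing `frac_up` against `frac_down` is the quick check for whether scores were about as likely to go down as up.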

And note: scores were just about as likely to go down as up. (Several scores went down with no response, which I find troublesome, except in cases where reviewers specifically said "I'm giving benefit of the doubt, but need a clarification here.")

You could also say: well, maybe the scores didn't change much, but the review text did. Again, it's pretty rare. Out of 430 reviews (long papers only), 3 reviews decreased in length (~50 words), 46 increased by at most 100 words, and 49 increased by over 100 words (wow, awesome!). But for 80% the review didn't change at all.

Now, getting back to the question of "was this due to AR or to reviewer discussion", this is of course impossible to de-conflate, but we can look at a similar plot as a function of discussion rather than AR:

Here, a few results stand out. First, there are slightly more score changes as a function of discussion than AR. Most of those worrying dots below y=0 that didn't have a response seem to have gone down due to discussions. Moreover, a few scores that went up (on the low-scoring, almost-certainly-rejected papers) did so with no discussion, suggesting that AR did change those scores. But around the x=3.2 position (the true borderlines), almost all of the papers with score changes had discussion, though plenty had discussion and no score change. Contrast this with the x=3.2 position in the AR plot, where all the papers have responses and still the majority of scores don't change.

An obvious response at this point is that we still don't know whether the final decision really changed as a function of response. This is a causality question we cannot perform the counterfactual for, so we're not going to get a solid answer. However, we can look at the following:

On the x-axis we have the final score of the paper, and on the y-axis we have (a smoothed version of) the probability that this paper is accepted.

The two nearly identical curves (red and blue) correspond to blue=all long papers and red=all long papers for which the authors submitted any response. In this setting, there really is almost no difference.

The slightly different curve (the black one) corresponds only to papers in which the reviewers engaged in a discussion. Around the critical point (score in the 3-3.5 range), discussion uniformly decreased odds of acceptance, which is of course not surprising for anyone who has ever participated in discussions.

You should take all this with a grain of salt, because all three of these curves are within one standard deviation of the blue curve (dotted lines).
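To make "a smoothed version of the probability" concrete, here is one way such a curve could be computed. This is a sketch under assumptions: it uses a Gaussian-kernel (Nadaraya-Watson) estimate with a bandwidth of 0.25, which is my choice for illustration and not necessarily the smoothing used for the plot, and the six papers are invented.

```python
import math


def smoothed_accept_prob(scores, accepted, x, bandwidth=0.25):
    """Kernel-weighted fraction of accepted papers near score x.

    Each paper gets a Gaussian weight based on how far its score is from x;
    the estimate is the weighted share of accepted papers.
    """
    weights = [math.exp(-0.5 * ((s - x) / bandwidth) ** 2) for s in scores]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no data near x; try a larger bandwidth")
    return sum(w for w, a in zip(weights, accepted) if a) / total


# Hypothetical final scores and decisions (NOT the NAACL data).
scores = [2.0, 2.5, 3.0, 3.0, 3.5, 4.0]
accepted = [False, False, False, True, True, True]

for x in (2.0, 3.0, 4.0):
    print(x, smoothed_accept_prob(scores, accepted, x))
```

Running the same function on a subset (say, only papers with a response, or only papers with discussion) gives the per-group curves to compare. A smaller bandwidth follows the data more closely but gets noisy where papers are sparse.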

Finally, there's of course the chance that response and discussion are highly correlated: that is, response should perhaps spur discussion. This is probably false (by the above plot), but just to drive the point home, here is the data:

The x-axis is the amount of discussion and the y-axis is the length of the response. See all those dots along x=0? Those are the ones for which the authors responded (in one case with a 2000 word essay!) and for which there was absolutely no discussion. And most of them are rejected papers.

On Review Usefulness

Some of you may recall that we did a post-conference survey of how much you liked the reviews you got. We truly did this at a later point in time because we didn't get our acts together earlier, but now I'll claim we did it at a later point in time so that authors were no longer emotionally distraught by their reviews.

We presented each corresponding author with their old reviews, but hid the scores from them (I doubt any/many went back to look). They were then asked, for each review in turn, how informative it was and how helpful it was. They could choose 0=not, 1=sort of, or 2=very.

The first hypothesis one might have (certainly I had it!) is that people "liked" reviews that were nice to them and "disliked" reviews that were not. (I've heard this called the craigslist effect: if the transaction is successful, everyone's happy; else, everyone's unhappy.) This actually appears not really to be true. Here are the results:

| | informativeness | helpfulness |
|---|---|---|
| rejects | 1.24 +- 0.57 | 1.25 +- 0.72 |
| accepts | 1.38 +- 0.48 | 1.27 +- 0.70 |

(+- is one standard deviation)

Basically authors of accepted papers didn't particularly find their reviews much more informative (+0.14) or helpful (+0.02) than those of rejected papers.

[Note: the sample size is 110 rejects and 86 accepts, so there was a higher response rate on accepts since the acceptance rate is about 25%.]
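The arithmetic behind that note can be spelled out. The sketch below treats the "about 25%" acceptance rate as exactly 0.25 and assumes the survey went out to accepted and rejected authors in proportion to that rate; both are simplifying assumptions, and `response_skew` is just a name I made up.

```python
def response_skew(accepts_responded, rejects_responded, acceptance_rate):
    """Return (share of survey sample that is accepts, relative response rate).

    The relative response rate is how many times more likely an accepted
    author was to respond than a rejected one, given the acceptance rate.
    """
    share = accepts_responded / (accepts_responded + rejects_responded)
    # Per-unit response rates are proportional to count / population share.
    accept_rate = accepts_responded / acceptance_rate
    reject_rate = rejects_responded / (1.0 - acceptance_rate)
    return share, accept_rate / reject_rate


share, relative = response_skew(86, 110, 0.25)
print(f"accepts make up {share:.1%} of the sample")
print(f"accepted authors responded about {relative:.1f}x as often")
```

So accepts are roughly 44% of the survey sample despite being about a quarter of submissions, which is the selection bias to keep in mind when reading the tables.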

You might suspect, however, that how much an author likes a review is correlated with that reviewer's score rather than the overall decision. We can look at that too (recall authors didn't see the scores when completing this survey):

| rating | avg(informativeness) | avg(helpfulness) |
|---|---|---|
| 0 | 3.04 +- 0.89 | 3.23 +- 0.87 |
| 1 | 3.22 +- 0.91 | 3.15 +- 0.93 |
| 2 | 3.21 +- 0.97 | 3.22 +- 0.96 |

Here, the first column (rating) is how the author scored the review. The second column is the average overall score that reviewers gave when the author rated the review's informativeness at that level; the third column is the same for helpfulness. For example, for reviews that were rated informative=2, the average score for those reviews was 3.21. Again, basically there's no effect.

Just to feel good about ourselves, authors do, in general, seem not-too-unhappy with their reviews.

Here's a histogram of informativeness for rejected papers:

0: ############## (15%)

1: ############################################ (44%)

2: ######################################## (41%)

Here's the same histogram for accepted papers:

0: ######## ( 8%)

1: ############################################## (46%)

2: ############################################## (46%)

The only real difference is that rejected papers did have twice as large a frequency of "informative=0", but the rest was pretty close. (And in case you're wondering, it's basically the same for "helpfulness." As pointed out by a friend, the inability to separate different aspects of the same object is typical when people are asked for intuitive judgements that don't involve reasoning. 70% of responses gave the same score for helpfulness and informativeness and another 29% gave scores within 1. Only 0.8% said inf=2 help=0 and 0.6% said inf=0 and help=2.)

Overall

I think of complaining about review(s|ers) as a staple hallway discussion, sort of an attractor state if you run out of other things to talk about. And we all remember the worst reviews we have had and often forget about the good ones that really do help our work get better. As Lillian Lee said at NAACL this year, it's usually our own fault when reviewers don't understand our papers. And keep in mind that all these numbers have huge randomness associated with them, of the sort highlighted by the NIPS experiment.

Despite this, it actually seems that we aren't too dissatisfied with our reviews. Only 11% of reviews were considered uninformative and, while I think we should strive to get this number down, I don't think it's that horrible. Especially when I consider the fact that 19% of papers have an average score of 2.0 or less, which basically means they were submitted not standing a chance (for a wide variety of reasons).

In general I don't think author response has an effect on the outcome that makes it worth the time and energy it takes. It might make authors feel better, but this is temporary when their paper almost certainly enjoys the same fate after response as before. I do think discussion is useful, and I'd rather see us spend more time on discussion and cut out response, perhaps by reducing the number of papers any given area chair has to deal with. We should realize, though, that discussion almost always serves to lessen the likelihood of acceptance, probably because it's easier to argue against a paper than for it, and reviewers don't really have any incentive to stand up for a paper they like, leading to a veto effect.